Robust Human Body Skeleton Detection and Human Motion Recognition

09 November,2023 - BY admin

09 November,2023 - BY admin

Robust Human Body Skeleton Detection and Human Motion Recognition

Human-Robot Collaboration (HRC) allows human operators to work side by side with robots in close proximity where the combined skills of the robots and operators are adopted to achieve higher overall productivity and better product quality. Under the concept of human-centricity in Industry 5.0, the robots should possess comprehensive perception capabilities so that the mutual empathy can be realized in the HRC assembly. Therefore, KTH has been working on the real-time perception of human operators in terms of the skeleton joints and ongoing motions.

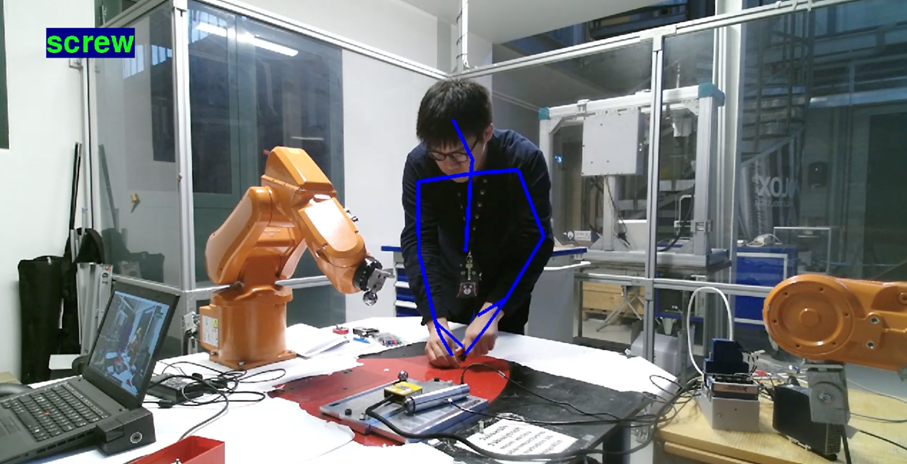

For the human skeleton detection, a Kinect V2 sensor is involved that consists of a RGB camera, a 3D depth sensor, and multi-array microphones. A total of 25 body joints are detected based on the calculations from RGB camera and 3D depth sensor. Multiple persons in the field of view can be simultaneously analysed. For each detected body joint, the result includes the 3D cartesian position and 3D orientation with respect to the Kinect V2 coordinate system, as well as the tracking state and restriction state. The Kinect V2 sensor is calibrated first in the HRC system and the obtained position is normalized. To further enhance the smoothness and consistency of joint positions in successive snapshots, the Unscented Kalman Filter (UKF) is implemented upon the original trajectory of values.

On the other hand, the human motion recognition module covers multiple data modalities (human body skeletons, RGB video frames and depth maps) and a deep learning approach. The skeletons directly reflect the topological movements of human body and the human intentions. Meanwhile, RGB video frames can capture the context information that is not encapsulated in the skeletons, such as the interacting objects, the used tools, the workbench, etc. The depth maps further represent the distances in space, thus avoiding the confusion of similar motions due to camera perspective. Spatial-Temporal Graph Convolutional Networks (ST-GCN) and Inflated 3D ConvNet are employed respectively to learn the spatial-temporal patterns from the multiple data modalities.

Get the latest news on ODIN right to your inbox!

Newsletter Permission: The ODIN project will use the information you provide in this form to be in touch with you and to provide updates and news. Please let us know if you would like to hear from us:

ODIN newsletter: You can change your mind at any time by contacting us at info@odinh2020.eu. We will not distribute your email address to any party at any time.

Comments (0)